How Useful Are LLMs for Search & Recommender Systems?

Notes on "Tuning LLMs for Recommendation Tasks"

Notes taken from Tuning Large Language Models for Recommendation Tasks.

LLMs are no longer just “hype”

Ever wondered how, in the era of LLMs, we can leverage their power in search and recommender (S&R) systems? I’ve taken some notes from a few key essays, and I hope they spark some ideas.

First, let's explore how useful LLMs are in search and recommender systems.

Some recommended pre-readings

Several ways to leverage LLMs in S&R systems

S&R with ChatGPT

Now, let's consider the pros and cons of using LLMs for S&R.

Pros:

LLMs are helpful for cold-start scenarios with zero-shot or few-shot capabilities.

LLMs allow for more flexible inputs (e.g., sentences vs. keyword-only search), enabling users to provide more context.

LLMs offer explainability.
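To make the zero-shot vs. few-shot point concrete, here is a minimal sketch of how a cold-start prompt might be assembled. The function name `build_prompt`, the prompt wording, and the sample items are my own assumptions for illustration; they are not taken from the paper, and no particular LLM API is implied.

```python
from typing import List, Sequence, Tuple


def build_prompt(
    history: List[str],
    candidates: List[str],
    examples: Sequence[Tuple[List[str], str]] = (),
) -> str:
    """Build a recommendation prompt.

    With no `examples` this is a zero-shot prompt; each (history, choice)
    pair in `examples` adds one in-context demonstration (few-shot).
    """
    lines = ["You are a recommender. Pick one item from the candidates."]
    for ex_history, ex_choice in examples:
        lines.append(f"User liked: {', '.join(ex_history)}")
        lines.append(f"Recommended: {ex_choice}")
    # The actual query goes last, with the answer left open for the model.
    lines.append(f"User liked: {', '.join(history)}")
    lines.append(f"Candidates: {', '.join(candidates)}")
    lines.append("Recommended:")
    return "\n".join(lines)


zero_shot = build_prompt(["Dune"], ["Foundation", "Emma"])
few_shot = build_prompt(
    ["Dune"],
    ["Foundation", "Emma"],
    examples=[(["Persuasion"], "Emma")],
)
```

The resulting string would then be sent to whichever LLM you use. Because the prompt accepts free text, the same builder could also carry a full-sentence query instead of keywords.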

Cons:

Hallucinations: LLMs may recommend non-existing or unexpected items.

Data security: How can we ensure that platform data is not leaked to LLM vendors?

LLMs lack an explicit recommendation objective, which may result in lower performance compared to traditional ranking models.

Limited context length may hinder the incorporation of large user sequence information.
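Two of the cons above (hallucinated items and limited context length) are often handled with simple guards around the LLM call. The sketch below is one hypothetical way to do that, not a method from the paper: `keep_catalog_items` drops anything the model returns that is not in the platform catalog, and `truncate_history` keeps only the most recent user events that fit a budget. The whitespace-based token count is a rough stand-in for a real tokenizer, and the catalog is assumed to be stored in lowercase.

```python
from typing import Iterable, List, Set


def keep_catalog_items(llm_items: Iterable[str], catalog: Set[str]) -> List[str]:
    """Keep only items that exist in the catalog, in order, without duplicates.

    Assumes `catalog` holds lowercase, whitespace-stripped item names.
    """
    seen = set()
    kept = []
    for raw in llm_items:
        key = raw.strip().lower()
        if key in catalog and key not in seen:
            seen.add(key)
            kept.append(key)
    return kept


def truncate_history(history: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent events whose total whitespace-token count fits the budget."""
    kept = []
    used = 0
    for event in reversed(history):  # walk from newest to oldest
        cost = len(event.split())
        if used + cost > max_tokens:
            break
        kept.append(event)
        used += cost
    return list(reversed(kept))  # restore chronological order


catalog = {"the matrix", "inception"}
llm_output = ["The Matrix", "Matrix 5: Reloaded Again", "inception ", "the matrix"]
valid = keep_catalog_items(llm_output, catalog)

events = ["viewed red shoes", "bought blue hat", "searched running socks"]
recent = truncate_history(events, max_tokens=6)
```

Filtering after generation does not stop the model from hallucinating; it only keeps non-existing items from reaching the user.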

How can we address these limitations?

Use the P5 model, fine-tuned on user behavior data, to combine private-domain knowledge with universal knowledge.

Con: it is difficult for the model to understand the semantic meaning of the IDs.
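As a rough illustration of what "fine-tuning with user behavior data" can look like, the sketch below turns interaction sequences into text-to-text training pairs using a leave-last-out split. The template wording and the helper name `to_text_pairs` are assumptions of mine, not the actual P5 prompts. It also shows the con above: IDs such as `i42` reach the model as opaque tokens with no inherent meaning.

```python
from typing import Dict, List, Tuple


def to_text_pairs(
    sequences: Dict[str, List[str]], min_len: int = 2
) -> List[Tuple[str, str]]:
    """Convert per-user item sequences into (source text, target text) pairs.

    The last item of each sequence is held out as the prediction target.
    Users with fewer than `min_len` interactions are skipped.
    """
    pairs = []
    for user_id, items in sequences.items():
        if len(items) < min_len:
            continue
        history, target = items[:-1], items[-1]
        source = f"User {user_id} interacted with: {', '.join(history)}. Next item?"
        pairs.append((source, target))
    return pairs


logs = {"u1": ["i10", "i42", "i7"], "u2": ["i3"]}
pairs = to_text_pairs(logs)
```

Pairs like these could then be fed to any sequence-to-sequence fine-tuning setup.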

There are a few ways to implement instruction tuning to help LLMs understand user/item IDs:

A tuning framework to align LLMs with recommendations

Flan-T5 Tuned for Recommendation by Google Brain

Showed that zero-shot LLMs perform worse than traditional models.

A fine-tuned LLM achieves comparable or even better performance using a small fraction of the training data.

InstructRec by WeChat

Introduced 39 coarse-grained instruction templates to cover all interaction scenarios, such as recommendation, retrieval, and personalized search.

RecLLM by Google Research

Proposed a roadmap for building an end-to-end (e2e) conversational recommender system (CRS) using LLMs.
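To give a feel for the instruction templates mentioned under InstructRec, here is a small sketch with one template per scenario (recommendation, retrieval, personalized search). These three templates are hypothetical examples I wrote for illustration. They are not the 39 templates from the paper, and the field names (`history`, `intention`, `query`) are my own.

```python
# Hypothetical templates, one per interaction scenario.
TEMPLATES = {
    "recommendation": (
        "The user has interacted with: {history}. Recommend the next item."
    ),
    "retrieval": "The user wants: {intention}. Retrieve matching items.",
    "personalized_search": (
        "The user has interacted with: {history}. "
        "They search for: {query}. Rank items for them."
    ),
}


def render(task: str, **fields: str) -> str:
    """Fill the template for `task`; raises KeyError on an unknown task or missing field."""
    return TEMPLATES[task].format(**fields)


retrieval_prompt = render("retrieval", intention="a light laptop")
search_prompt = render(
    "personalized_search",
    history="trail shoes, running socks",
    query="rain jacket",
)
```

Each rendered instruction would be paired with the expected output to form one instruction-tuning example.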

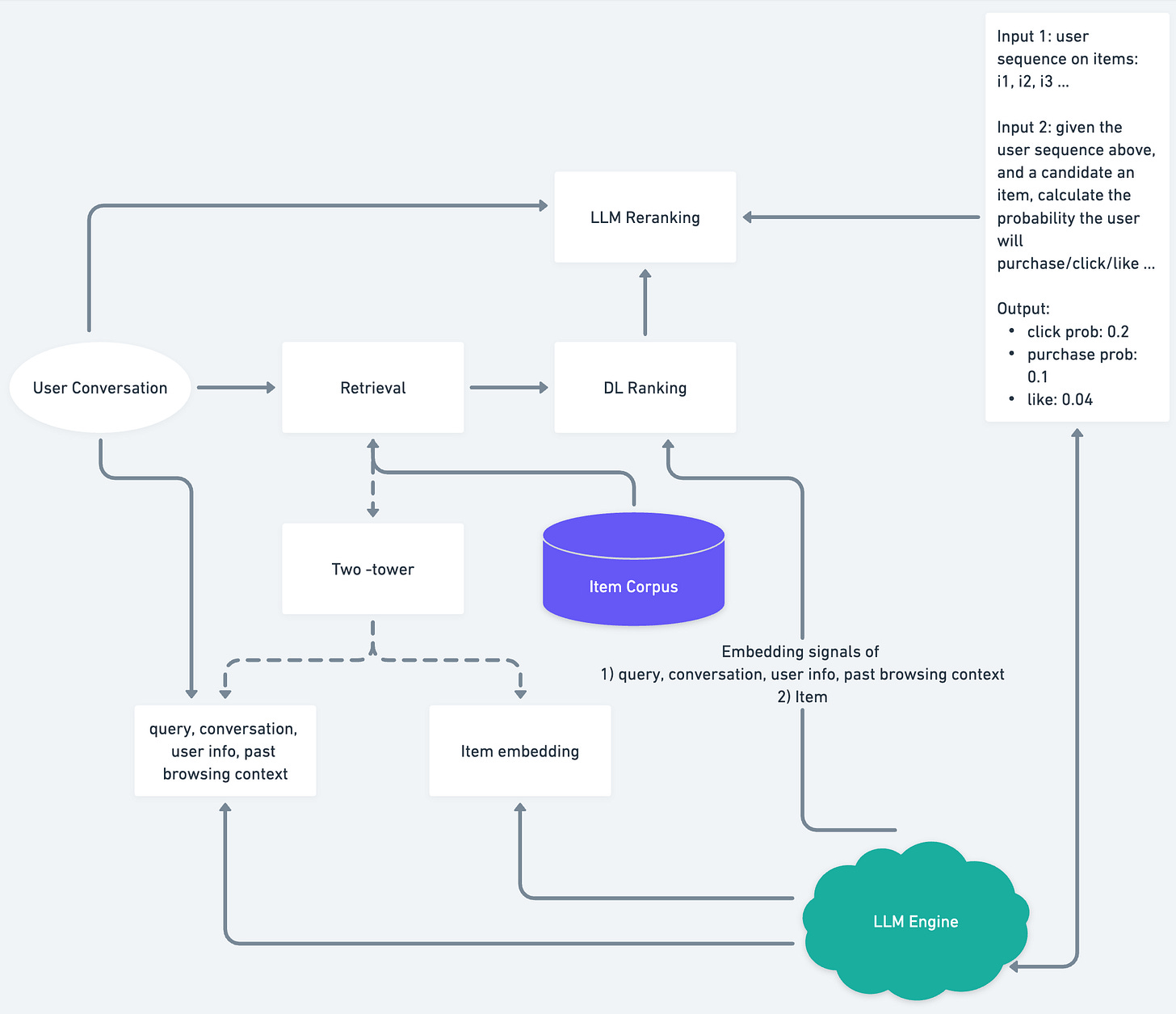

Future of Search architecture — a proposal

Below, I present a first version of the search architecture. The difference is highlighted around the LLM engine.

Please let me know your thoughts, as I am sure it will need to be revised in the future!